UPDATE: I have fixed the problem described below.

I had to do some reading on the GVR SDK for Unity in order to see what was going wrong. After being unable to use the method below to switch videos, I started to wonder if I could destroy the current video player texture and replace it with a new one. Reading through the documentation showed me that the CleanupVideo() and ReinitializeVideo() methods already do this.

I had written a function that switched the video URL, cleaned up the video (which deletes the prior video), and reinitialized the video (which sends the new URL to the GVR Video Texture). I then attempted to play the video and, as described below, it stopped at the first frame. The cause is explained in this passage from the documentation:

Because initializing the player and loading the video stream are asynchronous operations, it can be useful to have a callback when the video is ready. This is an alternative to polling the VideoReady property in the Update method. You can register a callback to be called when the video is ready by invoking GvrVideoTexturePlayer.OnVideoEventCallBack().

As it turns out, I was asking the video to play before it was ready (that is, before VideoReady became true). I fixed this by making the code wait until the video actually becomes ready: while(VideoReady != true); The wait is not noticeable to the user, so this seems like a good solution to the problem. (I may be able to measure how long the wait lasts using the Android developer tools.)
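For anyone who would rather not block in a busy loop, here is a minimal sketch of the same switch-then-wait sequence written as a C# iterator, which is the shape Unity coroutines take. It is not the SDK's code: IVideoTexture is a hypothetical stand-in for the handful of GVR video texture members mentioned in this post, FakeVideoTexture only simulates a video that becomes ready after a few frames, and maxFrames is an illustrative safety limit I added so the wait cannot run forever.

```csharp
using System;
using System.Collections;

// Hypothetical stand-in for the parts of the GVR video texture used in this
// post. The member names mirror the ones mentioned above; the real SDK class
// may differ in naming and signatures.
public interface IVideoTexture
{
    string VideoUrl { get; set; }
    bool VideoReady { get; }
    void CleanupVideo();
    void ReinitializeVideo();
    bool Play();
}

public static class VideoSwitcher
{
    // Switches the URL, then yields once per frame until the video reports
    // ready (or maxFrames is reached) before calling Play().
    public static IEnumerator SwitchVideo(IVideoTexture player, string url, int maxFrames)
    {
        player.VideoUrl = url;
        player.CleanupVideo();       // drop the prior video
        player.ReinitializeVideo();  // start loading the new URL

        int waited = 0;
        while (!player.VideoReady && waited < maxFrames)
        {
            waited++;
            yield return null;       // hand control back to the caller for one frame
        }

        if (player.VideoReady)
        {
            player.Play();
        }
    }
}

// Illustration only: a fake player that becomes ready a fixed number of
// frames after ReinitializeVideo() is called.
public sealed class FakeVideoTexture : IVideoTexture
{
    private readonly int framesToLoad;
    private int framesLeft = -1;

    public FakeVideoTexture(int framesToLoad) { this.framesToLoad = framesToLoad; }

    public string VideoUrl { get; set; }
    public bool VideoReady { get; private set; }
    public bool IsPlaying { get; private set; }

    public void CleanupVideo() { VideoReady = false; IsPlaying = false; framesLeft = -1; }
    public void ReinitializeVideo() { framesLeft = framesToLoad; }

    // Simulates one frame of asynchronous loading.
    public void Tick()
    {
        if (framesLeft > 0) framesLeft--;
        if (framesLeft == 0) VideoReady = true;
    }

    public bool Play()
    {
        if (!VideoReady) return false;
        IsPlaying = true;
        return true;
    }
}

public static class Demo
{
    // Drives the iterator by hand, one simulated frame per step, and reports
    // whether the fake player ended up playing.
    public static bool Run()
    {
        var player = new FakeVideoTexture(3);
        IEnumerator routine = VideoSwitcher.SwitchVideo(player, "second-clip.mp4", 600);
        while (routine.MoveNext())
        {
            player.Tick();
        }
        return player.IsPlaying;
    }
}
```

Inside Unity, an iterator like this would be handed to StartCoroutine from a MonoBehaviour, where yield return null resumes on the next frame, so the main thread keeps rendering while the video loads. The callback mentioned in the documentation passage above is another way to get the same result without polling.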

This resolves the problem: the video sources can now be switched via C# script without switching scenes. Using the GVR SDK’s documentation, I also learned how to use expansion files and access the videos inside the .OBB file. This will be tremendously important for us, as shipping multiple video clips without expansion files could put us well over the APK size limit of 100 MB (some videos, uncompressed, are in the ~10-30 MB range).

—————————————————————————————————————————————

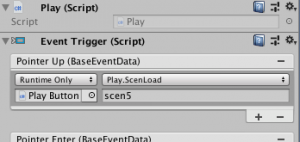

I have been working on getting our video stream to switch on a button click instead of creating a new scene with a new video. Too many scenes can increase the size of our game, which is undesirable.

I have been working with the GVR Video Player Texture. There is supposedly an easy way to switch the video stream, but I have not had success so far. I have successfully changed the URL from which the video plays, but the video freezes on the first frame once the user presses the button. In essence, the video does change; it just doesn’t play. Google VR has some documentation on swapping the video streaming source here: https://developers.google.com/vr/develop/unity/video-overview

I will keep working on fixing this problem, and I will also explore the potential of using Unity’s built-in video player. (I have used Unity’s built-in video player with Daydream, and it does work.)