January 23, 2019

To program the MSP430 on the Schmartboard, you need hardware that can flash the chip. Dedicated tools such as the MSP-FET are expensive and better suited to industrial or business use. These tools can flash the chip over 4-wire JTAG. However, you can also flash the MSP430 from the Launchpad itself using Spy-Bi-Wire (SBW), which TI sometimes calls 2-wire JTAG. 4-wire JTAG is faster than SBW but needs more pins on the chip, and unless you are flashing hundreds of chips, the extra time SBW takes is not noticeable.

Besides power for the target chip, SBW requires the following two signals:

- Pin 28 – TEST/SBWCLK

- Pin 29 – ~RST/NMI/SBWTDIO

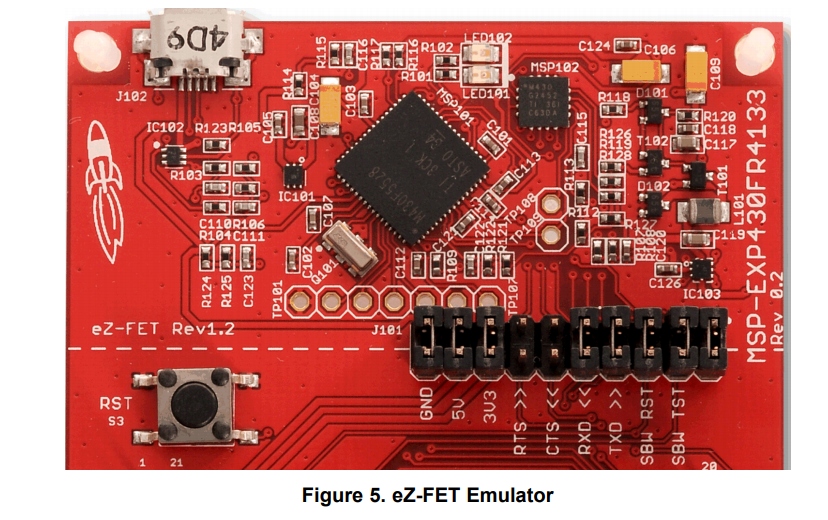

The MSP-EXP430FR6989 Launchpad has an eZ-FET emulator on the top portion of the Launchpad.

Figure 1 – eZ-FET (Picture from slau627a)

The Launchpad ships with jumpers that connect the on-board eZ-FET to the on-board chip. By removing the jumpers from GND, 3V3, SBWTDIO and SBWCLK and wiring those four signals to the matching MSP430 pins on the Schmartboard, you can flash the external chip. The Launchpad user’s guide (slau627a) describes how to use the eZ-FET to program an external target, and the MSP Debugger’s Guide (slau647) covers SBW from the eZ-FET in more depth. Some important things to note:

- pg. 7 – The target chip must be powered from the eZ-FET emulator while you program it through SBW, so use the power supply from the Launchpad. Once you have finished programming, you can connect the target to an external power supply.

- pg. 40 – The LEDs on the eZ-FET can indicate problems. When both LEDs are off, the eZ-FET has become disconnected from your computer (this can happen after an unsuccessful firmware update). When only the red LED is on, the target board’s power connections are probably wrong.

- On the MSP-EXP430FR6989 Launchpad, the wires to the target must be connected from the eZ-FET side, not the on-board chip side. This may seem obvious, but it is easy to accidentally connect to the wrong side.

- When you look up error codes in the TI forums, some posts recommend putting capacitors and pullup resistors on the SBWTDIO and/or SBWCLK lines. However, those posts are usually about 2-wire JTAG on a tool like the MSP-FET. If you look at the hardware section of the Launchpad user’s guide (pg. 32), you can see that the eZ-FET circuitry already has the necessary pullups and capacitors built in. Adding more capacitors and pullups can cause problems.

- If you are programming an MSP430FR6989, you do not need to change any target configuration settings in Code Composer Studio to program an external chip instead of the Launchpad’s on-board chip. The on-board chip is programmed over SBW anyway, so removing the jumpers and connecting the same signals to an external chip looks the same to the software.
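As a rough illustration, the wiring described above can be written down as a small Python table with a sanity check. This is only a sketch for keeping notes straight while wiring: the names `EXPECTED_WIRING` and `check_wiring` are made up for this post, and mapping 3V3 and GND to the DVCC/AVCC and DVSS/AVSS supply pins is my assumption rather than something taken from the user’s guide.

```python
# Signals on the eZ-FET side of the jumper block and where each one
# should land on the external MSP430FR6989.
# Pins 28 and 29 come from the list earlier in this post; the power rows
# name supply pins only (an assumption, no pin numbers given here).
EXPECTED_WIRING = {
    "GND": "DVSS/AVSS",
    "3V3": "DVCC/AVCC",
    "SBWTDIO": "pin 29 (~RST/NMI/SBWTDIO)",
    "SBWCLK": "pin 28 (TEST/SBWCLK)",
}


def check_wiring(actual):
    """Return a list of problems; an empty list means the wiring matches."""
    problems = []
    for signal, expected in EXPECTED_WIRING.items():
        got = actual.get(signal)
        if got is None:
            problems.append(f"{signal} is not connected")
        elif got != expected:
            problems.append(f"{signal} goes to {got}, expected {expected}")

    # Call out the most common mistake explicitly: the two SBW lines reversed.
    if (actual.get("SBWTDIO") == EXPECTED_WIRING["SBWCLK"]
            and actual.get("SBWCLK") == EXPECTED_WIRING["SBWTDIO"]):
        problems.append("SBWTDIO and SBWCLK are swapped")
    return problems


if __name__ == "__main__":
    # Example: a board where the two SBW wires were reversed.
    my_board = dict(EXPECTED_WIRING)
    my_board["SBWTDIO"], my_board["SBWCLK"] = my_board["SBWCLK"], my_board["SBWTDIO"]
    for problem in check_wiring(my_board):
        print(problem)
```

The check cannot see your actual board, of course. It is just a way to record what you connected and compare it against the four signals the eZ-FET expects.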

I ran into a few errors while using SBW and worked out why they occurred. Some of them include:

- Unknown device. – Your chip may not be powered properly, or your SBW connections may be wrong. Make sure you have not swapped SBWTDIO and SBWCLK, and that you take those signals from the eZ-FET side. Bad soldering on the SBW pins can also cause this problem.

- Could not set target VCC. – Your chip may not be powered properly. If you changed settings under Target Configurations in Code Composer Studio, you may also have accidentally set the target VCC to something other than 3300 mV (the recommended VCC for this chip).

- Trouble Writing Memory Block at…. – Your chip is probably not powered properly (make sure you use decoupling capacitors!). Double-check your connections, and if you are using jumper wires, make sure they are good.

As you can see, most errors with SBW occur because the device isn’t being powered correctly! Make sure you follow TI’s recommendations for powering the device, including proper decoupling capacitors between the AVCC/AVSS and DVCC/DVSS pins. Before I added the decoupling capacitors between those pins, I got the Trouble Writing Memory Block at…. error more often, although operation was still pretty reliable.
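The error list above can be condensed into a small lookup, sketched here in Python. The causes are only the ones I ran into, not an official TI list, and the names `LIKELY_CAUSES` and `likely_causes` are hypothetical. Matching is a plain case-insensitive substring search on the message text, on the assumption that the error strings appear as quoted above.

```python
# Error text (as quoted in this post) mapped to the causes I found,
# most likely first. Not an exhaustive or official list.
LIKELY_CAUSES = {
    "Unknown device": [
        "target not powered properly",
        "SBWTDIO and SBWCLK swapped, or taken from the on-board chip side",
        "bad soldering on the SBW pins",
    ],
    "Could not set target VCC": [
        "target not powered properly",
        "target VCC in the target configuration is not 3300 mV",
    ],
    "Trouble Writing Memory Block": [
        "target not powered properly (check decoupling capacitors)",
        "loose connections or bad jumper wires",
    ],
}


def likely_causes(message):
    """Return the known causes for an error message, or [] if unrecognised."""
    text = message.lower()
    for key, causes in LIKELY_CAUSES.items():
        if key.lower() in text:
            return causes
    return []


if __name__ == "__main__":
    for cause in likely_causes("Error: Unknown device."):
        print("-", cause)
```

Every entry starts with the same first suspect, which is the point: check the power wiring before anything else.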

If you want an alternative to Code Composer Studio for checking whether your target board is recognized, TI offers UniFlash, which should be able to identify your chip (assuming it is powered correctly).