April 4, 2019

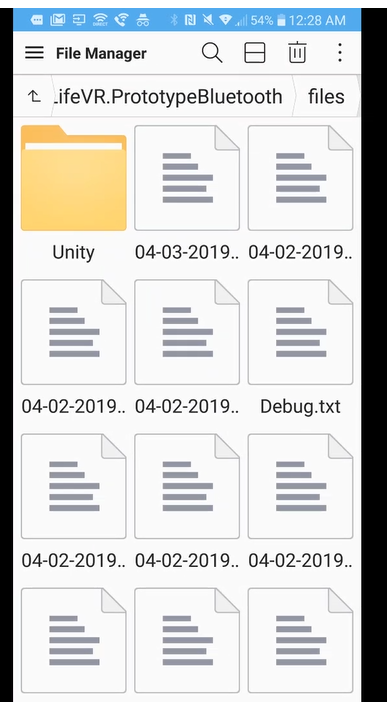

When we had a single crash in our first focus group, I didn’t think much of it. But when we had three crashes in our second focus group, something was definitely wrong. My preliminary testing had never shown frequent crashes, so I had to think of the best way to crash the game on purpose. After adding a bunch of logging to the code and clicking hotspots as fast as possible until it crashed, I found that it was crashing in code I hadn’t even written. Google provided code for displaying 360 video, and we were using that to switch between the multiple videos we recorded.

After enabling some developer options in the Daydream app, I monitored the resources that the game was using. What I found was that each time a video switched, more RAM was being acquired but nothing was being released. This caused the memory to shoot from the default 200 MB to 500+ MB, which is much more than an app like this is allowed to use. Each of our video files was about 20 MB, which is the amount the RAM jumped each time a video switched. The provided 360 video code was not cleaning up the resources it was using.
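
As a rough sanity check on those numbers, here is a minimal sketch of the arithmetic. It assumes the app dies at roughly 500 MB and that every switch leaks exactly one 20 MB video; both are rounded from the readings above, and the function name is purely illustrative.

```python
def switches_until_limit(baseline_mb, leak_per_switch_mb, limit_mb):
    """Number of video switches needed for leaked memory to reach the limit."""
    headroom = limit_mb - baseline_mb
    # Ceiling division: the limit is only crossed on a whole switch.
    return -(-headroom // leak_per_switch_mb)


# 200 MB baseline, 20 MB leaked per switch, ~500 MB assumed ceiling
print(switches_until_limit(200, 20, 500))  # 15
```

Under those assumptions the game has room for about 15 switches, which fits with an easy playthrough surviving and a harder, longer one crashing.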

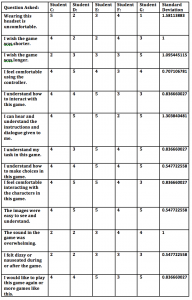

Since the first focus group played the game on an easy difficulty, the students were able to get through it without switching videos more times than the game could handle. The second group, however, was playing a much more difficult version. This meant that they triggered more video switches and played the game longer, which eventually used up all the memory available to the game.

Fixing this issue was going to be a challenge. Since the game runs in Unity, which uses C# as its language of choice, I had limited access to resource management. C# uses its own “garbage collection,” whereas plain C would force me to allocate and free all the memory I wanted to use. I needed to figure out a way to force the script to clear its memory before switching videos without impacting performance. Luckily, I knew from previous experience that disabling an object that had a script attached to it would cause all the running variables in that script to be deleted.

My first approach was to disable and then re-enable the video player object right before playing a video, but this caused even more frequent crashing. My mistake was not accounting for how long disabling and re-enabling the object took to actually clear the memory and start a new instance. When the game tried to run the script attached to the video player, it was trying to run a disabled script, which in turn crashed the game.

My second and final approach was to space out the disable and the re-enable. I disabled the video player right after it left the code that signaled the video was done playing, and re-enabled it just before its methods were actually needed. That gave it enough time to flush the video player’s memory and start a new instance before a new video started playing.
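
The difference between the two approaches comes down to timing, which a toy model can illustrate. This is not Unity code: `ToyVideoPlayer`, its method names, and the assumption that cleanup takes a few frames are all hypothetical stand-ins for the behavior described above.

```python
class ToyVideoPlayer:
    """Toy stand-in for the video player object (not Unity code)."""

    def __init__(self, cleanup_ticks=3):
        self.cleanup_ticks = cleanup_ticks  # assumed frames a disable needs to finish
        self.ticks_left = 0                 # frames of cleanup still pending
        self.enabled = True
        self.held_mb = 0                    # memory held by loaded videos

    def disable(self):
        self.enabled = False
        self.ticks_left = self.cleanup_ticks

    def enable(self):
        self.enabled = True

    def tick(self):
        """Advance one frame; memory is released when cleanup completes."""
        if self.ticks_left > 0:
            self.ticks_left -= 1
            if self.ticks_left == 0:
                self.held_mb = 0

    def play(self, size_mb):
        if not self.enabled or self.ticks_left > 0:
            raise RuntimeError("video player is not ready")
        self.held_mb += size_mb


def back_to_back(player, size_mb):
    """First approach: disable and re-enable right before playing."""
    player.disable()
    player.enable()
    player.play(size_mb)  # raises: cleanup has not finished yet


def spaced_out(player, size_mb, frames_between_videos=10):
    """Second approach: disable when a video ends, re-enable just before the next."""
    player.disable()                      # previous video just finished
    for _ in range(frames_between_videos):
        player.tick()                     # gameplay continues while cleanup runs
    player.enable()
    player.play(size_mb)


player = ToyVideoPlayer()
for _ in range(100):
    spaced_out(player, 20)
print(player.held_mb)  # 20
```

In this model, `back_to_back` fails on the first call because the player is used before its cleanup finishes, while `spaced_out` never holds more than one video's worth of memory.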

To make sure this was in fact the correct solution, I compiled the game and loaded it on the phone with the developer options enabled. I was able to verify that the memory was staying at a steady 200 MB and not increasing linearly with each switch of the video. Once that was verified, I needed to do a stress test. Before the fix, the game was only able to run around 10-15 videos before it crashed. The stress test I did performed 100 video switches, and it still showed no signs of crashing or slowing down. To make sure it would continue to work, I left it running for 24 hours and then did another 50 video switches while checking the memory. All was good and the game didn’t crash. The next day I ran through another 100 video switches and again did not experience a crash.
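
The "steady versus increasing linearly" check can be reduced to a single number: the average growth per switch. Below is a minimal sketch, assuming one memory reading is written down after each switch. The readings are made-up values shaped like the behavior described above, not actual logs, and the function name is illustrative.

```python
def leaked_mb_per_switch(samples_mb):
    """Average memory growth per switch across a list of readings."""
    if len(samples_mb) < 2:
        return 0.0
    return (samples_mb[-1] - samples_mb[0]) / (len(samples_mb) - 1)


# Illustrative readings only: a leaking run and a fixed run.
leaky = [200 + 20 * i for i in range(11)]   # grows 20 MB on every switch
steady = [200] * 101                        # flat across 100 switches

print(leaked_mb_per_switch(leaky))   # 20.0
print(leaked_mb_per_switch(steady))  # 0.0
```

A value near the size of one video file points to a leak on every switch; a value near zero means the memory is being released.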